Controlling actual disinformation without injecting bias or stifling free speech isn’t easy. It’s amazing what a couple of days and $44 billion can do. When I saw that an anti-gun post on Twitter had been fact-checked, it was clear that they were trying something new that might also actually be fair.

The Challenge: Control Actual Disinformation While Respecting Freedom of Speech

It would be wildly inaccurate to say that Twitter’s approach to fact-checking has been fair or remotely even-handed. In my experience, they primarily did whatever they could to defend the establishment, “approved” narratives and, whenever possible, the mainstream authoritarian left.

Meanwhile, big anti-gun accounts sharing disinformation largely got to continue making baseless claims against firearms ownership. Even if every one of Twitter’s fact-checks was true and accurate (I’ll leave that one up to readers’ imaginations), the fact that they were unevenly applied is what indisputably made them biased.

On the other hand, one of my concerns with Elon Musk’s recent purchase of Twitter is that he’d create a free-for-all environment with nothing to stop the flow of intentional disinformation. Combined with the mute and block functions, we’d pretty quickly divide many Twitter users into neat echo chambers where they get a steady diet of disinformation from people with an axe to grind instead of gaining better knowledge through enlightened free speech.

At best, that would have a negative effect on politics and discourse. At worst, it could lead to IRL violence as people disconnect further from reality and start acting on imaginary threats and internet rumors, much like the Pizzagate shooter.

Perhaps worse, rampant disinformation would allow agents of authoritarian states (such as the People’s Republic of China and their two-million-strong army of “wumao”/網評猿 propagandists) to inject bad information into our political discourse. This could put our country at a disadvantage to them at the worst possible time, and is a very real risk to national security.

Given the risks, this is a problem any responsible social media operator must consider and deal with. But fair and even-handed moderation and fact-checking can’t really happen in cubicles at some corporate headquarters.

For one, there just isn’t enough manpower to address the volume of mis- and disinformation that bad actors like the Chinese government and anti-gun organizations are pumping into it. The other issue is that corporate culture tends to create its own sets of biases, leading to eager fact-checkers who push leftward to impress their likeminded bosses.

Flagging An Anti-Gun Lie

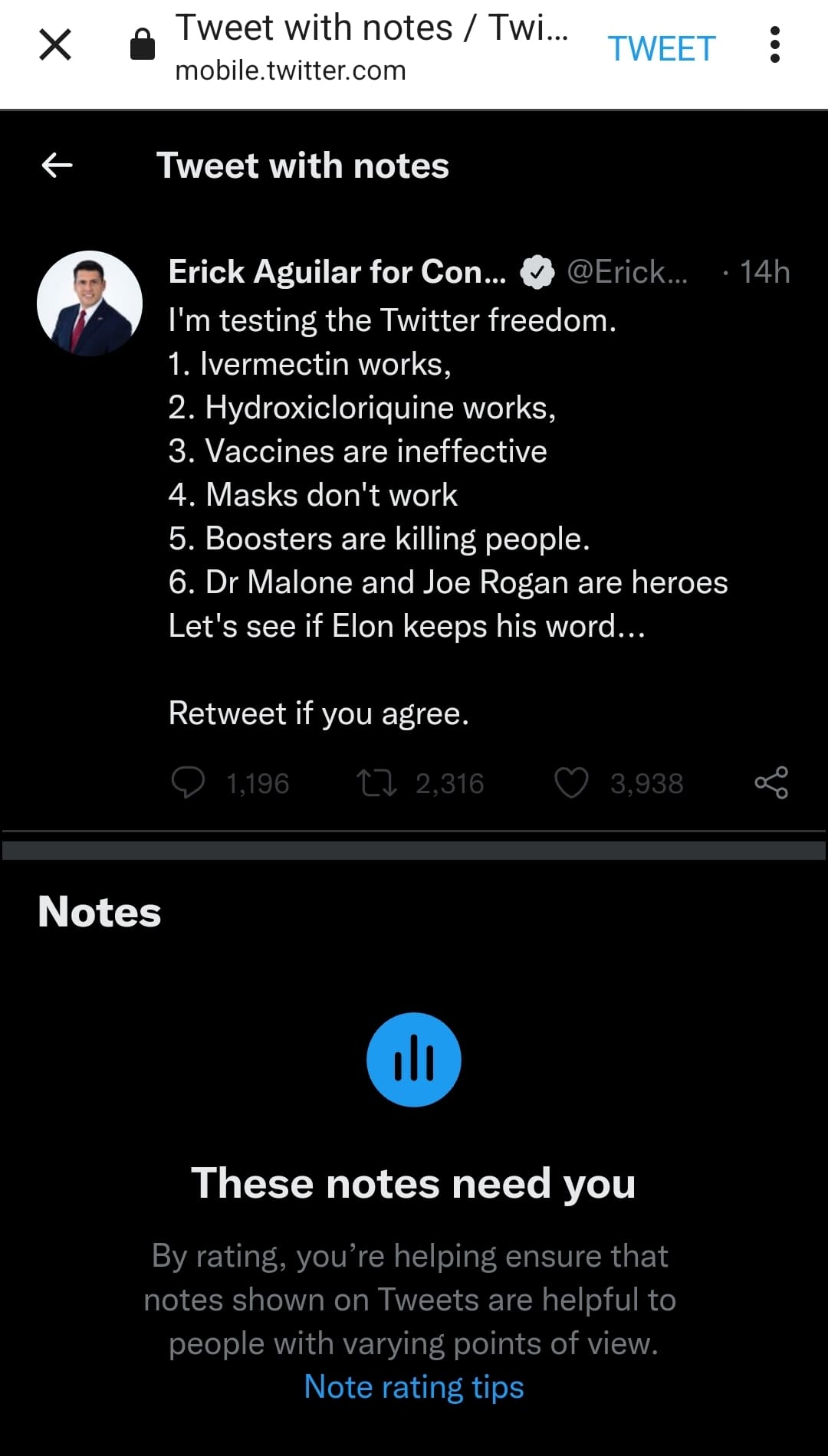

OK, maybe not a lie. You can probably chalk up the following example to a Brit’s blind ignorance. Anyway, yesterday Twitter selected me for a trial view of Birdwatch. I found this out when I saw a notice below an anti-gun tweet that set the facts straight:

Instead of “the unquestionable fact-checkers thus sayeth,” it was clear that this new fact-checking system is driven by average users. Instead of taking a hard position, the notice says “Readers added context they thought people might want to know.”

The simple notice under the tweet above states that the guns pictured are, in fact, BB guns, and aren’t regulated like firearms because they have a low risk of injury. Something most Americans would know, but apparently not a hapless BBC “journalist.” Links to back up both assertions were included, too.

Unlike past fact-checking efforts, Birdwatch doesn’t rely on the word and opinion of people working at Twitter HQ. Instead, it allows normal users to flag disinformation and provide context. Then, their “note” goes into a moderation system that’s also driven by users.

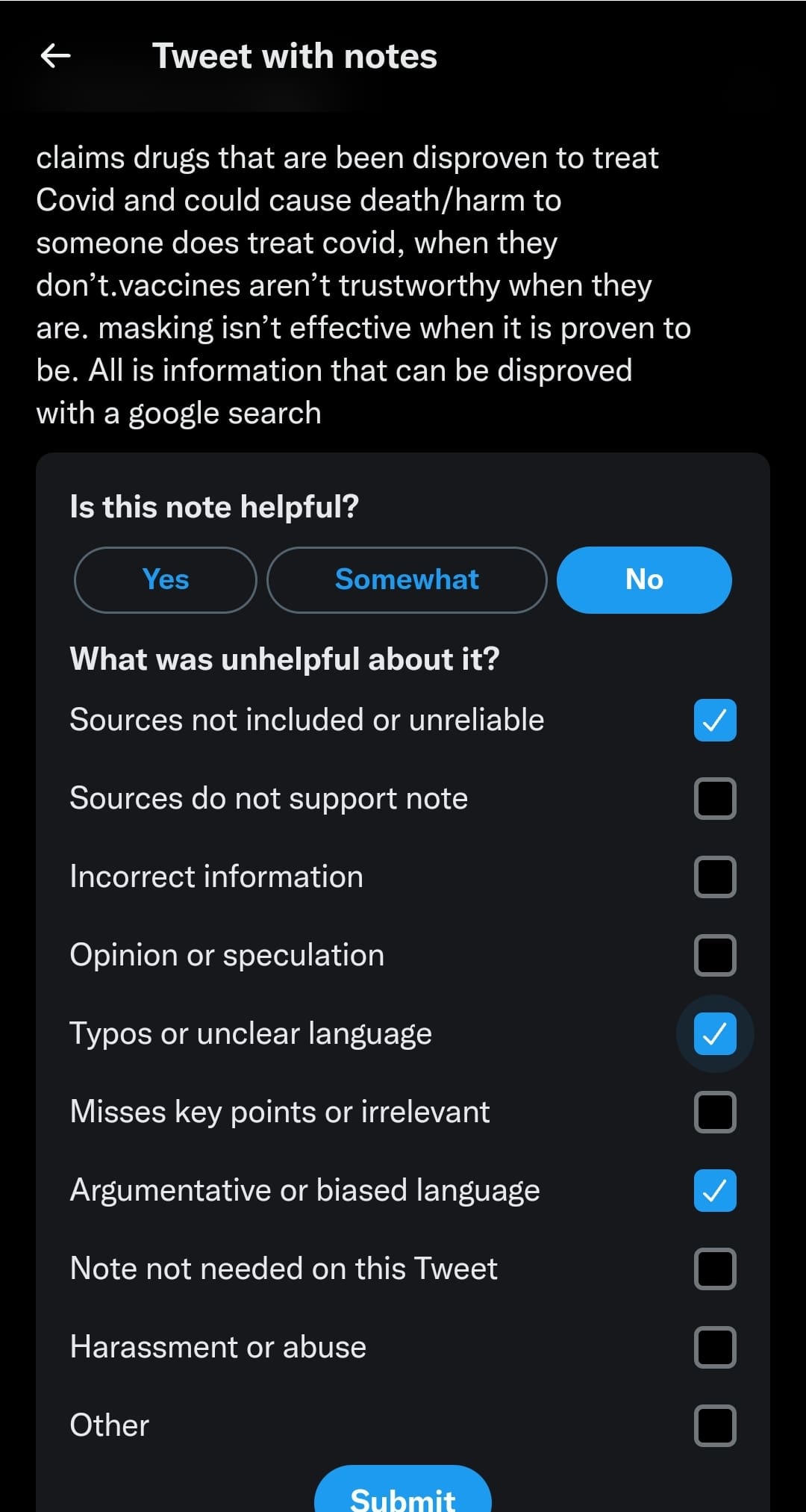

Here’s what that system looks like:

It shows you tweets that someone has flagged with a note and asks you to rate the notes.

It asks you whether each note is helpful, and you can answer “Yes”, “No”, or “Somewhat”.

It then asks you to explain why you thought the fact-checking or context note was or wasn’t helpful. In this case, I chose “No” because it provides no sources, uses biased language, and is poorly worded.

To deal with people who approve or disapprove of notes based on their own ideology or tribal affiliation rather than objective criteria, Twitter doesn’t rely on the word of one reviewer. To actually appear below a tweet, a note needs a number of positive ratings from people across the ideological spectrum (presumably based on past tweets). So, good tweets aren’t going to get a note unless there’s a broader consensus that something is wrong.
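To make the cross-spectrum idea concrete, here is a minimal sketch in Python. It is not Twitter’s actual algorithm, which isn’t described here. The group labels, the two thresholds, the half-credit for “Somewhat”, and the function name `note_should_appear` are all assumptions made up for illustration.

```python
from collections import defaultdict

# Hypothetical thresholds, chosen only for illustration.
MIN_HELPFUL_PER_GROUP = 2   # helpful "points" a group must give the note
MIN_GROUPS_AGREEING = 2     # how many different groups must reach that bar

# Assumed scoring of the three answers a rater can give.
HELPFUL_SCORES = {"yes": 1.0, "somewhat": 0.5, "no": 0.0}


def note_should_appear(ratings):
    """Return True if raters from enough different groups found the note helpful.

    `ratings` is a list of (group, answer) pairs. `group` is an assumed label
    for the rater's usual viewpoint; `answer` is "yes", "somewhat" or "no".
    """
    helpful_by_group = defaultdict(float)
    for group, answer in ratings:
        helpful_by_group[group] += HELPFUL_SCORES[answer.lower()]

    # Count the groups whose combined rating clears the per-group bar.
    groups_agreeing = [
        group
        for group, score in helpful_by_group.items()
        if score >= MIN_HELPFUL_PER_GROUP
    ]
    return len(groups_agreeing) >= MIN_GROUPS_AGREEING


# Five "yes" votes from a single camp are not enough to show the note.
one_sided = [("left", "yes")] * 5

# Support from two camps clears the bar.
cross_spectrum = [
    ("left", "yes"), ("left", "yes"),
    ("right", "yes"), ("right", "somewhat"), ("right", "somewhat"),
]

print(note_should_appear(one_sided))
print(note_should_appear(cross_spectrum))
```

The point of the design is that raw vote counts don’t matter on their own. A pile of approvals from one camp does nothing until people who usually disagree with that camp also rate the note as helpful.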

Perhaps more importantly, the offending tweet doesn’t get removed. The person sharing disinformation gets their freedom of speech, while their followers get to decide for themselves whether the person was sharing faulty information or trying to deceive people, and they get information broadly found useful to help them make their decision.

While no system is perfect, I think this puts the power of fact-checking back where it belongs…in the hands of normal platform users. It also gives us a fighting chance against all kinds of disinformation and not just things the left objects to.

How To Help Fact-Check Anti-Gun Propaganda

This system predates Musk’s purchase of Twitter, but it does appear that they’re letting more people into it since he made the deal. So, we can’t be sure whether he’ll keep it or ask for further improvements. But if they do decide to keep it, we could end up with a system that puts us all on a level free speech playing field rather than one that’s tilted to one side.

If you want to learn more about it and contribute to rating these context notes, you can check it out yourself here.

I stopped at, “The Challenge: Control Actual Disinformation While Respecting Freedom of Speech”.

There should be no, absolutely no controlling disinformation. Free speech is free, or it isn’t. Protecting people from themselves is not “free speech”.

Not even sure “slander” and “libel” should be sanctioned.

The original idea of making online conversations immune from legal action due to what is posted did not include curating so as to protect people from the world.

The idea was to treat internet conversation the same as the electric companies who provide the power to run websites; electric companies cannot be made liable for people who use electricity in the commission of a crime. Then, somehow, internet forums became responsible for “policing” websites to eliminate “extreme” language and ideas. Doesn’t matter which “side” is policing; both are dangerous at the task.

Sam,

Yup. When the author wrote that Twitter should continue to control disinformation, I became suspicious that the author is a lib in conservative clothing.

As for his rating system, it will not take long before campaigns are organized to promote a Tweet or Tweeter. The data will be useless. Especially if Twitter-bots are not eliminated.

It is hard to stomach that some crazy bigot could go on a free speech platform and advocate post-birth abortion (otherwise known as infanticide), but free speech is free speech and that includes the freedom to be monstrously ugly.

All of that being said, the freedom to publish illegal material, such as kiddie porn, is not free speech. The issue there is all the activities governments have a penchant for criminalizing. Example: If I Tweet that I used the veterinary form of Ivermectin to cure my Wuhan flu, and add ” you should try it”, well, here comes the FBI Swat team, ready to kick in my doors and seize my property for practicing medicine without a license.

Doesn’t matter who does the censorship, it is all a matter of who is deciding what is to be censored. That leads to “might makes right”.

As to content that violates federal law, the point is that illegal remains illegal, and action can be taken against the offender….after a violation (just like with any law). It is the desire to censor without liability that is just wrong.

If Bezos should become the only “news” outlet in the country, I would make the same arguments about squelching “free speech”. Given the incestuous relationship between the Biden admin, and the colossal digital forums (Biden’s people are telling the big social media sites to eliminate “misinformation”), the colossal digital forums are de facto agents of government. Bezos would be no different.

When speech in the digital public square is controlled by money, the “public square” is no longer “public”.

Sam,

Speaking of free speech, my detailed comment to you is awaiting moderation.

Thanx for the heads-up; looking forward to it.

Cheers,

Lol…

Hey Lifesavor, sadly it may never reappear.

Hope you made a copy before posting. If so try dividing it up and reposting in smaller parts. It looks messy but it appears, for whatever reason, that is the way this site moderates, by length of the post.

Only people who need to fact check the facts about Gun Control are politically inept history illiterates. The former rulers of twitter fit that mold so they can fact check the following until the cows come home…

1) The Second Amendment is one thing.

2) The criminal misuse of firearms, bricks, bats, knives, vehicles, etc. is another thing.

3) History Confirms Gun Control in any shape, matter or form is a racist and nazi based Thing.

“There should be no, absolutely no controlling disinformation. Free speech is free, or it isn’t. Protecting people from themselves is not “free speech”.”

Agreed.

Look what’s breaking news :

“BREAKING: Biden administration creates ‘Disinformation Governance Board’ under DHS to fight ‘misinformation'”

https://thepostmillennial.com/breaking-biden-administration-creates-disinformation-governance-board-under-dhs-to-fight-misinformation

That verifies it, Musk has them in full-panic mode, and they are losing their shit, *Big-Time*… 🙂

“Look what’s breaking news :

“BREAKING: Biden administration creates ‘Disinformation Governance Board’ under DHS to fight ‘misinformation’” ”

Thanx for grabbing that link.

The ‘Ministry of Truth’, indeed.

Watching that blow up will be *highly* entertaining… 🙂

Haha, yep.

I’m glad Musk is doing what he’s doing, and I agree that an ISP (a provider of something that has become a necessity) should be similar to a utility, but it’s important to differentiate between those and social media pages. I have yet to see any bad examples from actual ISPs.

“…but it’s important to differentiate between those and social media pages.”

Not actually. The social media are benefited by not being regulated as a publisher. However, social media sites depend on the electricity of the internet, the power needed to survive. As such, social media should be considered a utility. In that light, they can favor no agenda.

If it is imperative that people using the internet be protected from themselves, then content curation by social media hosts should be required to be open and transparent, not intellectual property/trade secrets that can be protected from scrutiny.

Whenever “free speech” is infringed, for any reason, corruption abounds and prospers.

Dependence on a utility does not make you a utility. Cabela’s.com depends on the internet; are they obliged to disseminate my opinions? Machine shops depend on electricity, but that doesn’t oblige them to build from plans you send them.

True, but an infringement is clearly defined as “Congress mak[ing a] law”. Private citizens not gifting you a free platform for your opinions no more infringes 1A than SIG refusing me a free P365 infringes 2A.

Print publishers have (and always had) comment / letters to the editor sections. They often found it in their best interest to publish off-the-wall opinions, to expose them to scrutiny and rebuttal (as others have noted here), or possibly just to troll readers / stir up circulation. The fact that it does not represent their own work product was always clearly understood and did not magically appear with the internet. Conversely, this did not boundlessly oblige the publisher to print every word – any more than TTAG has a moral duty to provide spammers a platform (although they sure act like it sometimes).

Umm . . .

“I have yet to see any bad examples from actual ISPs.”

T-Mobile shadow banned certain links to stories that people were trying to share. I’m not sure if that was online or through texts, but they made it look like it was shared when it wasn’t.

As they are an ISP who do enjoy the privileges of a utility, I do support regulating the hell out of them.

I subscribe to PT Barnum’s dictum, and if one cannot get past being that “sucker” through experience, they will continue on in their ignorance, often into danger itself when taken to the extreme.

“I subscribe to PT Barnum’s dictum, …”

There is that to be considered.

Ah but BB gunz & pellet gunz ARE banned in Briton. Air gunz allowed with paltry pathetic power levels.No pointy knives. So this Brit was right about his hideous country…doing a wait n see twitter attitude. An elderly fakebook friend posted he just joined. Oh boy🙄

Aren’t air guns regulated like firearms in a couple of communist states, like NJ?

Crimson,

Yup. No BB guns in NJ without a permit. Flame throwers, however, are just fine. In fact, that is how my brother, down in south Jersey, grills dinner.

Illegal since the 1970s. Dad gave me a warning when we moved there in the late 70s, “Don’t let anyone see you with that.”…

Wow, that is sad, LifeSavor. I love BB guns for shooting the squirrels in my neck of the woods. What a pain in the butt it would be to have to have-I am sorry, get a permit-just for critter control.

our threshold is 700fps, need gun card for those.

They used to be in Michigan, but no longer the case. However, I do believe it continues to be illegal to have a suppressor/silencer on an air gun in michigan.

They are classified as category A firearms downunder.

Ridiculous I know. So my son uses real guns at the range instead.

I think they even require permits for bows and arrows, er, I meant, “missiles.”

Slingshots like the venerable ‘Wrist Rocket’ were illegal, as well, in NJ.

Didn’t stop me or my friends, we just didn’t let anyone see what we were doing…

A camera on every corner now Geoff, the old grey mare ain’t what she used to be.

my jersey pal told me betta (siamese fighting fish) were illegal.

and everyone had b.ball bats.

possum,

I’ve heard that WristRocket slingshots are remarkably effective at disabling CCTV cameras. Or so I’m told . . . not that I would ever do that, myself. Private security cameras that monitor private property? Totally OK with that. Cameras that monitor public places? Yeah, those need to die a quick death. Starting with traffic cameras. It frightens me a little that so many in our culture are OK with busybody @$$holes monitoring their every move when they are in public . . . but that’s what idiots like dacian the stupid and MinorIQ are committed to. They want to control us “for our own good”.

Cool. But I am still not going to sign up.

I still have not heard a word that the British guy ever owned up to his error.

Well, darn it. Obviously somebody ought to make a law so those pesky Americans can’t get a BB gun without a permit!

PS, that British guy is about as likely to admit he is full of poop as their crown prince is to admit he was fooling around with teenage chicks all over the planet. Rule #1 in the British Empire, the British are never wrong. Rule #2, if they’re wrong, read Rule #1 again.

On the bright side with every narcissistic self-righteous choad playing tweet police there should be a lower overall volume of tweeting.

Nobody will ever look anything up or research beyond their personally approved source list so I trust this will be fundamentally useless at promoting reality.

@Geoff “I’m getting too old for this shit” PR”

The ‘Ministry of Truth’, indeed.

Watching that blow up will be *highly* entertaining… ”

Got that rainy day feeling that somehow such a development will be highly popular.

And the federal courts will declare that it can’t be abolished without legislation.

I will never understand why foreigners are proud of being barred from purchasing weapons.

“My country thinks I will either turn into a criminal or try and overthrow the government if I even touch a weapon. They have zero trust in my ability to act like a responsible adult; you should be so lucky!”

What a weird thing to be proud of.

“My country thinks I will either turn into a criminal or try and overthrow the government if I even touch a weapon. They have zero trust in my ability to act like a responsible adult; you should be so lucky!”

I’ve noticed that’s actually a pretty common attitude among the Left. I’ve actually heard them say things like –

“I could never own a gun, I’d go around shooting people!”

I respond to them – “You have no idea how happy I am that you *don’t* own a gun…”.

And slowly inch away from them… 🙂

my brit neighbor wants a gun. his wife said no. “because i’d shoot you with it.”

If the Bri-ish are overrun by the Russian army, I will personally donate a Daisy RedRyder to their cause. I know I’ve said eff them in the past, but I’ve been schooled in the error of my ways by Al(l)-bert Ha(i)ll… they’re on their own for ammo supply though. They can use the money they’ve saved by forgoing dental treatments.

Guys, this has nothing to do with the article. Apologies to the author. I’m planning a trip. I’ll be taking a small firearms battery. I have a couple of rifles, but my Holy Grail is a rifle Springfield Armory built back in the ’80s. A M-1 Tanker in .308 with a Beretta BM-59 folding stock. It should fit in the truck nicely and handle any trouble. If you have one you would part with, cash or trade, let me know. Just for the Feds. All laws would apply to any transaction.

it’s not a true m1a? what’s different?

good luck. looks to be couple large neighborhood. about half that for the stock. would be superb.

an abbreviated .308 is my fave. it’s what the mini thirty should be.

Excellent article Jennifer. Thank you

Sounds almost like they’re exploring the age old maxim, “The best response to bad speech is more speech.”

I just don’t trust them…

The main example here is dumb semantics, because you can in fact buy a rifle and ammo at Walmart.

The person who made the post (a non-resident alien) could not.

Not my Walmart. You can indeed buy some ammo from their sparsely stocked, locked glass cabinet if you can find someone with the key. But they don’t sell any firearms. And I live in Texas.

Was in a Walmart down near the Gulf last week. Walked past the gun counter. They had a couple of over priced Turkish shot guns and 1 Remington 870 , and a handful of .22 rifles. 2 bolt rifles of undetermined centerfire calibers. Didn’t get close enough to check the little info tags.

Ammo was in short supply though. A few boxes of shot shells, and a couple boxes of steel case crap for centerfire firearms. And a couple boxes of odd caliber brass cartridges. No rimfire, no 30cal anything, nothing anyone would use for hunting in this area. The handgun ammo shelf had nothing but dust on it.

One of our local Walmarts had its FFL pulled long ago. They stopped selling ammo as well. Management at the time seemed happy since they did not have to deal with the ‘hoarders’ anymore.

Last time I walked past the locked up ammo case in my local Walmart, there were several dozen packages of Bullet Weights inside. 🤣

Gotta keep everything with the word ‘bullet’ locked up, even if it’s only used to catch fish.

1. As others have pointed out, no he could not – he is not legally permitted to purchase a firearm.

2. If he DID purchase a firearm at Costco, he would have to go through THE SAME 4473/NICS process as every OTHER person who buys a firearm at Costco. Which he could not, so he would not be able to purchase.

3. Not saying there aren’t any, but I’ve never seen a Costco that sold actual firearms. Be cool if they did, though.

I am so glad we had a war to separate ourselves from the English tyrants. Anything they say about the United States should be reviewed as being suspect. They still can’t get over the fact that they lost the war.

Are you talking about the war with the Zulus,

which war? They’ve lost a lot of wars.

Interesting that you would mention the Zulus and the British. Because it’s not well-known but the British supplied guns to the Africans. In exchange for slaves and other items. However the Africans often complained that the Firearms were of poor quality. And The “washing of spears” was the worst defeat of the British military by the Zulus Army at Isandlwana,

Btw

The first use of machine guns in combat was not in WW1 France. It was in Africa I believe in the 1890’s. It was how the British finally defeated the Zulu military.

Twitter is what small birds do before a cat jumps on them.

Either a cat, or 1 of the local Red Tailed Hawks. A bunch of small birds were trying to mob a hawk this morning. Hawk turned quickly and grabbed a little bird and the rest of the small birds scattered and flew off. Kind of like what happens when someone actually posts a tweet that is honestly backed up with facts. The Leftists/Marxists/Progressives squawk and scatter.

@Umm . . .

If social media want immunity for their action/non-action regarding curation, then they should be regulated as a utility. As a utility, social media hosts cannot edit content. If social media sites want to edit usage, then they are publishers, subject to the results of their publication action/inaction.

The “social media are private companies, and they can do as they please” plays directly into the hands of the social media hosts who want absolute control over content, but immunity from results of that control.

Can’t have it both ways….but they do.

A utility is a very special case, sort of a mutually consensual step outside the free market. It is a monopoly or oligopoly (literally, not in the Marxist whiner or envious out-competed competitor sense). In exchange for a government-granted lock on a market (and all that goes with it, like the prerogative to clutter, block off, and/or dig up expanses of public property for its private profit) it relinquishes practically all discretion and becomes a quasi-governmental service (many, in fact, replacing, supplementing, or piggybacking off government services). A social media page bears zero resemblance to any of that.

As regards having it both ways, what are the “results” of a publisher letting some kookiness into their clearly delineated “not our product / not our position or ideas” sections; conversely, where is their boundless / non-discretionary obligation to do so? Do you believe TTAG (which hosts, say, 25-125 comments per article) has a duty to spammers, even if there are tens of thousands of them? Or do you, conversely, believe that they are (or should be) under constant scrutiny / threat of punishment for every over-the-top comment here?

“Do you believe TTAG (which hosts, say, 25-125 comments per article) has a duty to spammers, even if there are tens of thousands of them?”

If TTAG is a publisher, then TTAG is free to curate spam. If TTAG is not editing comment, thus not a publisher, then whatever shows up is posted, and TTAG is immune to lawsuits regarding content. As it is now, TTAG also gets to have it “both ways”.

The analogy between standard utilities, and social media, is to highlight that if a criminal (or criminal organization) uses utility-produced products when plotting crime, the utilities are not liable. The utility is not a publisher, organizer, nor facilitator of crime. In the same way, social media companies want the same immunity for what is on their websites, while participating in the activity hosted.

Neither is anyone else. Every time Biden’s PLCAA misrepresentation comes up, honest people point out that it only extends the same protection (i.e. against liability for criminal misuse) already enjoyed by everyone else.

And again, everyone else gets “both ways” for content that isn’t theirs.

Freedom of Speech is irrelevant. Twitter is a corporation, not a government entity. Corporations can do what they want and set the rules about who can speak and how or when. There is no protection under the Constitution to stop that. For those who missed civics: Freedom of Speech is a right of the People under the First Amendment of the US Constitution that bars THE GOVERNMENT from restricting speech—it has nothing to do with private actors like Twitter.

“…the First Amendment of the US Constitution that bars THE GOVERNMENT from restricting speech—it has nothing to do with private actors like Twitter.”

This is the argument the giant globalist corporations just love. And it is bogus.

When businesses become a de facto government, it cannot be possible that citizens are left entirely to the mercies of private companies. However, the issue is actually not the 1st amendment, but the federal (government?) law that provides legal immunity to private businesses based on content of communication, such that content editing is not considered “publishing”, and those private businesses do, indeed, edit content (publishing).

What we are seeing is de facto government use of private business to conduct business in a way that enormously advantages the government over the population. Section 230 of the Communications Decency Act is an act of the federal government to regulate speech. Government always regulates speech for “the good of the public”, i.e. protecting the populace from itself. Since the regulations “protect” the people, any opposition is, by default, harmful to the public.

Remove government de facto control over speech, and free speech emerges free of government interference. Remove the protection given to corporations for results of their actions, then private businesses can do as they please regarding what appears on their media outlets, while bearing the burden of risking law suits for their action/inaction.

There is a difference between something being legal and something being right. Social media has become the public forum of our era. The people using your argument are the same ones notorious for demonstrating on private property, shutting down public roads, destroying businesses & homes. All under the claim of “freedom of speech”. How is it suddenly different online?

These sites exist (supposedly) for social/public interaction. Restricting that to ONE ideology may be legal, but isn’t right.

fppf,

I think you are either extremely unaware of what has been happening around you or you’re in love with the current situation which means you’re a fan of fascism. The government is using Big Tech to control the flow of information and to censor their political rivals. The Puppet Administration has literally admitted to being in contact with them on a regular basis regarding “acceptable” speech. There are also plenty of former Big Tech executives within the administration. “Acceptable” speech is anything that doesn’t contradict the left wing narrative.

In addition to being in direct contact with them, they will often just ask for speech control in the open. Big Tech obliges because it’s in their financial interest to be on the good side of government regulation. Did you notice how the government is suddenly threatening to regulate companies like twitter? Did you notice how the DOJ is suddenly investigating Musk? That’s how the game is played.

Lefties are fine with Big Government acting like the local mob boss as long as companies push left wing ideology. Lefties have zero regard for the Constitution. They just use it as an excuse (along with libertarian talking points-Build your own Twitter! Wait, no you can’t buy Twitter! Nooo!!) to push for speech control. It’s no different than the way they’re always quoting scripture when they think it backs them up, even though they despise Christianity.

fppf, I have news for you. Just like the radio and TV the internet is a means of communication and is regulated by the government. We have this thing called the First Amendment, which the Left has tried to control for the past 15 or so years.

Well, the tide has turned. The Left is being set back on its heels with court decisions, and the people’s revolt against their propaganda.

While Twitter is a “private company” they are still subject to the Constitution and its protections for the users.

Yes, and . . . no. Twitter, Google, Facebook, et al. lobbied HARD to get their Section 230 protections. Sure, they can “violate free speech rights”, because they are private companies. But they sure as HELL shouldn’t be allowed to do that under government protection, should they????

Interesting, but I’m still not going to make a twitter account. I was going to after companies began using twitter for their press releases, etc. I was only interested in one organization. Then, an industry blog I read began to report on their relevant tweets with a link to the tweet in the article. Of course, everyone does that now. So why would I bother with a time consuming account unless it was important enough to go directly to the source?

@Umm . . .

“Every time Biden’s PLCAA misrepresentation comes up, honest people point out that it only extends the same protection (i.e. against liability for criminal misuse) already enjoyed by everyone else.”

That is a message that is not “getting through”. People in the 2A defense movement report the truth, but politicians claiming to be 2A supporters are awfully quiet about it.

I agree, but my main point was the “everyone else” part.

“I agree, but my main point was the “everyone else” part.”

Understand. I was using your comment to hammer my thoughts more loudly. I think we are pretty much aligned.

Did the UK guy not find the plane neck pillows? Literally every WalMart I have ever been in has had a universal plug adapter available.

From the very first comments I noticed that many did not read the full story. Had you done so, you would have learned that Twitter is NOT deleting any comments but adding additional information to the post, and that new information has optional information checkpoints.

They are trying to prevent what happens when people use the block/ban and things devolve to segmented groupthink as so many other social networks or pages have become.

It might be a better idea than turning into an echo chamber.

Liked the article thanks!
